I bought a "dead" 1551 drive a few months ago. It's a European model, 220 V only, and the seller said "it smoked when it was on". Well, I figured it was dead, but maybe not dead dead.

So, step one was removing the .. huge ass transformer.

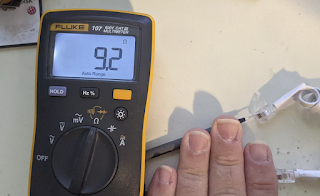

I stripped off the regulators and hooked it up to a pair of bench supplies to feed 5 V and 12 V with a current limit.

(And yes, by this stage I had done a bunch of debugging already, so I had taken out the ROM, PLA and RAM and socketed the PLA / RAM.)

I unplugged the drive head cable and measured it - the heads measured just fine. So, I left them off whilst I debugged everything else.

Then I fired it up - the drive spun, a bunch of CPU pins were blinking on the oscilloscope, but nothing quite worked. The drive constantly spinning is a sign that even the early boot code isn't running.

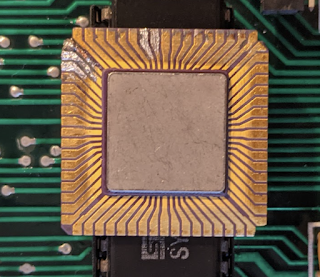

So I then wanted to know if the CPU worked. The problem? All the pins kinda looked fine. Except A15.

It looked very sus. Like ok, NMOS has some fun rise times, but the other pins weren't this fun.

I decided to socket everything so I could pull the RAM and address decode gate array out. I thought about socketing the 42-pin drive gate array IC too, but I figured if that was fried I was in for an unfun time.

The next thing was to figure out how to test that the CPU kinda worked. I figured I could write a little program to toggle the activity LED quickly and see it on my scope. I started with the disassembly here - http://www.cbmhardware.de/show.php?r=7&id=21 - to see how the LED is blinked. The 6510T CPU has an 8 bit IO port (versus the 6 bit IO port on the 6510 CPU).

The program looked roughly like this:

            .org $ff00

            SEI             ; disable interrupts
            CLD             ; clear decimal mode
            LDA #$6F        ; IO direction bits
            STA $00         ; program IO direction bits
    loop:
            LDA #$60        ; turn on LED
            STA $01         ; program IO port
            LDA #$68        ; turn off LED
            STA $01         ; program IO port
            JMP loop

            .org $fffa

            ; NMI, RESET and IRQ vectors all point at $ff00
            .byte $00, $ff, $00, $ff, $00, $ff
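If you don't have a 6502 assembler handy, the test program is small enough to hand-assemble. Here's a rough Python sketch that drops the bytes into a 16 KiB image for a 27128. It assumes the ROM is mapped at $C000-$FFFF (so $ff00 lands at offset $3f00 in the chip) and pads the rest with $FF like a blank EPROM. The names `ROM_BASE`, `PROGRAM` and `build_image` are mine, purely for illustration.

```python
ROM_BASE = 0xC000   # assumption: the 16 KiB ROM sits at $C000-$FFFF
ROM_SIZE = 0x4000   # 27128 = 16 KiB
ENTRY = 0xFF00      # where the test program starts
VECTORS = 0xFFFA    # NMI, RESET, IRQ vectors (2 bytes each)

# Hand-assembled version of the LED test program.
PROGRAM = bytes([
    0x78,              # SEI
    0xD8,              # CLD
    0xA9, 0x6F,        # LDA #$6F
    0x85, 0x00,        # STA $00
    0xA9, 0x60,        # loop: LDA #$60   (loop = $FF06)
    0x85, 0x01,        # STA $01
    0xA9, 0x68,        # LDA #$68
    0x85, 0x01,        # STA $01
    0x4C, 0x06, 0xFF,  # JMP $FF06
])


def build_image():
    """Return a 16 KiB ROM image containing the program and vectors."""
    image = bytearray([0xFF] * ROM_SIZE)

    start = ENTRY - ROM_BASE
    image[start:start + len(PROGRAM)] = PROGRAM

    # 6502 vectors are little-endian: $FF00 is stored as $00, $FF.
    vector = ENTRY.to_bytes(2, "little")
    vec_off = VECTORS - ROM_BASE
    image[vec_off:vec_off + 6] = vector * 3

    return bytes(image)
```

Writing `build_image()` out to a file with `open(..., "wb")` should give something an EPROM programmer will accept as a raw binary.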

The test program didn't work. So I got a new CPU, and A15 on that one looked much better.

With the new CPU the test program did work: the LED was blinking at a few hundred kilohertz. OK, but the original ROM? Nothing. My guess was that the ROM was also busted, so I programmed another 27128 EPROM with the right image and inserted it into the drive.
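As a sanity check on that blink rate, you can count cycles in the loop. This is a back-of-the-envelope sketch, assuming the standard 6502 timings (LDA immediate = 2, STA zero page = 3, JMP absolute = 3) and a CPU clock of about 2 MHz, which I haven't measured on this board. The function names are just for illustration.

```python
# Standard 6502 cycle counts for the instructions used in the loop.
CYCLES = {
    "LDA #imm": 2,
    "STA zp": 3,
    "JMP abs": 3,
}


def loop_cycles():
    """Cycles for one pass: LED on, LED off, jump back."""
    on = CYCLES["LDA #imm"] + CYCLES["STA zp"]
    off = CYCLES["LDA #imm"] + CYCLES["STA zp"]
    return on + off + CYCLES["JMP abs"]


def led_frequency_hz(clock_hz):
    """One full on/off period per loop pass."""
    return clock_hz / loop_cycles()


if __name__ == "__main__":
    # 2 MHz is an assumed clock, not a measured one.
    print(f"{loop_cycles()} cycles per loop")
    print(f"{led_frequency_hz(2_000_000) / 1000:.0f} kHz at 2 MHz")
```

That comes out in the low hundreds of kilohertz, which is the same ballpark as what I saw on the scope. The duty cycle won't be 50%, since the "off" half of the loop includes the JMP.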

Everything worked! So, time to plug in the head cable OH CRAP I PLUGGED IT IN BACKWARDS. Bang, I blew the head coils. Crap.

Anyway, the rest of the drive seemed fine: stepper motor, drive motor control. Now, the challenge: it's a Mitsumi drive. I went on eBay to find a replacement drive. There were lots of "untested" 1541s everywhere, but I did find a "tested, guaranteed works" SX-64 drive. However, it's an Alps drive. They're supposed to be more reliable, but .. well, it arrived a few days later.

The main physical difference between the two is the connector. The thing I remember is that the write current is slightly different and some 1541 drives have a jumper to change said current.

Luckily there's a 1541 service manual and it has the Alps drive pinout for the 1541, which is surprisingly exactly what I need for the 1551:

So I followed this guide, verified at each step that it worked, and connected it all up.

And yes, I put superglue in the key hole for the head connector so I didn't plug it in backwards.

And .. well, it works!

Well, kinda. I had the Commodore 16 drop into the monitor after HEADER (which formats a blank disk) completed. I've also had some issues with commands hanging and not running on the drive. So, there may be some other issues lurking.

I also want to figure out a suitable mod for the write head current change.

But hey, I guess I do have a working Alps drive in my 1551.

And yes I did tear the head apart to see how it's put together... :-)